This post has moved to eklausmeier.goip.de/blog/2020/07-07-asrock-deskmini-a300m-with-amd-ryzen-3400g.

Below are some photographs taken during assembly of the ASRock A300M with an AMD Ryzen 5 Pro 3400G processor.

The ASRock website details the specs of the A300M: DeskMini A300 Series.

Three noteworthy reviews of the A300M:

- Anandtech has a very readable review of the A300M: The ASRock DeskMini A300 Review: An Affordable DIY AMD Ryzen mini-PC.

- A short review from Techspot: Asrock DeskMini A300 Review.

- A German review with many photos during assembly: ASRock DeskMini A300 mit AMD Ryzen 5 2400G im Test.

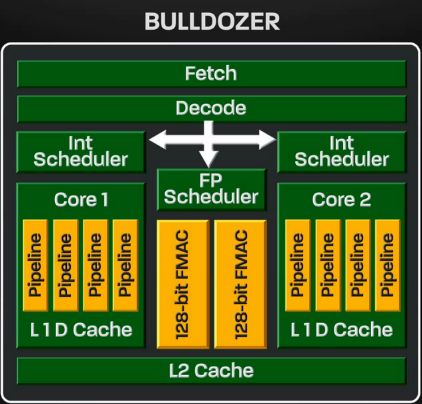

1. CPU. Three photos of the AMD 3400G CPU:

2. Power. The power supply of the A300M provides at most 19 V × 6.32 A ≈ 120 W.
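The power figure above is simply voltage times current. As a quick sanity check, here is a minimal sketch; the constant and function names are only illustrative, and the two input values are the ones quoted in the text.

```python
# Rated output of the power supply, as quoted in the text above.
VOLTAGE_V = 19.0
CURRENT_A = 6.32


def rated_power_watts(voltage_v, current_a):
    """Return DC power in watts using P = U * I."""
    return voltage_v * current_a


power_w = rated_power_watts(VOLTAGE_V, CURRENT_A)
print(f"{power_w:.2f} W")  # prints "120.08 W"
```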

3. Dimensions. The case has a volume of at most two liters, illustrated by the two milk cartons.

4. Mounting. CPU mounted on motherboard.

5. Temperature. I installed a Noctua NH-L9a-AM4 cooler. With the CPU running at full speed under full load, the command sensors reports the following temperatures:

    amdgpu-pci-0300
    Adapter: PCI adapter
    vddgfx: N/A
    vddnb: N/A
    edge: +85.0°C (crit = +80.0°C, hyst = +0.0°C)

    k10temp-pci-00c3
    Adapter: PCI adapter
    Vcore: 1.23 V
    Vsoc: 1.07 V
    Tctl: +85.2°C
    Tdie: +85.2°C
    Icore: 100.00 A
    Isoc: 9.00 A

    nvme-pci-0100
    Adapter: PCI adapter
    Composite: +57.9°C (low = -0.1°C, high = +74.8°C)
               (crit = +79.8°C)

    nct6793-isa-0290
    Adapter: ISA adapter
    in0: 656.00 mV (min = +0.00 V, max = +1.74 V)
    in1: 1.86 V (min = +0.00 V, max = +0.00 V) ALARM
    in2: 3.41 V (min = +0.00 V, max = +0.00 V) ALARM
    in3: 3.39 V (min = +0.00 V, max = +0.00 V) ALARM
    in4: 328.00 mV (min = +0.00 V, max = +0.00 V) ALARM
    in5: 216.00 mV (min = +0.00 V, max = +0.00 V) ALARM
    in6: 448.00 mV (min = +0.00 V, max = +0.00 V) ALARM
    in7: 3.39 V (min = +0.00 V, max = +0.00 V) ALARM
    in8: 3.30 V (min = +0.00 V, max = +0.00 V) ALARM
    in9: 1.84 V (min = +0.00 V, max = +0.00 V) ALARM
    in10: 256.00 mV (min = +0.00 V, max = +0.00 V) ALARM
    in11: 216.00 mV (min = +0.00 V, max = +0.00 V) ALARM
    in12: 1.86 V (min = +0.00 V, max = +0.00 V) ALARM
    in13: 1.71 V (min = +0.00 V, max = +0.00 V) ALARM
    in14: 272.00 mV (min = +0.00 V, max = +0.00 V) ALARM
    fan1: 0 RPM (min = 0 RPM)
    fan2: 2626 RPM (min = 0 RPM)
    fan3: 0 RPM (min = 0 RPM)
    fan4: 0 RPM (min = 0 RPM)
    fan5: 0 RPM (min = 0 RPM)
    SYSTIN: +97.0°C (high = +0.0°C, hyst = +0.0°C) sensor = thermistor
    CPUTIN: +87.5°C (high = +80.0°C, hyst = +75.0°C) ALARM sensor = thermistor
    AUXTIN0: +62.0°C (high = +0.0°C, hyst = +0.0°C) ALARM sensor = thermistor
    AUXTIN1: +94.0°C sensor = thermistor
    AUXTIN2: +90.0°C sensor = thermistor
    AUXTIN3: +86.0°C sensor = thermistor
    SMBUSMASTER 0: +85.0°C
    PCH_CHIP_CPU_MAX_TEMP: +0.0°C
    PCH_CHIP_TEMP: +0.0°C
    PCH_CPU_TEMP: +0.0°C
    intrusion0: OK
    intrusion1: ALARM
    beep_enable: disabled
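With this many readings it is easy to miss which temperatures actually exceed their configured limit. The following is a minimal sketch, not part of the original setup, that scans sensors-style text for temperature lines and reports readings above a `crit` or `high` limit on the same line. The function name `over_limit` and the rule that limits of 0.0 °C or below count as "not configured" are my own assumptions, based only on the output above.

```python
import re

# Matches a temperature line such as "edge: +85.0°C (crit = +80.0°C, hyst = +0.0°C)".
READING = re.compile(r"^(?P<label>[^:]+):\s+\+?(?P<value>-?\d+(?:\.\d+)?)°C(?P<rest>.*)$")
# Matches a "crit = ..." or "high = ..." limit inside the remainder of the line.
LIMIT = re.compile(r"(?P<kind>crit|high)\s*=\s*\+?(?P<value>-?\d+(?:\.\d+)?)°C")


def over_limit(sensors_text):
    """Return (label, reading, limit kind, limit) for readings above a limit.

    Assumption: a limit of 0.0 °C or below means "not configured" and is skipped.
    Limits printed on a continuation line (no label) are ignored by this sketch.
    """
    hits = []
    for line in sensors_text.splitlines():
        match = READING.match(line.strip())
        if not match:
            continue  # voltages, fan speeds, adapter names, continuation lines
        value = float(match["value"])
        for lim in LIMIT.finditer(match["rest"]):
            limit = float(lim["value"])
            if limit > 0.0 and value > limit:
                hits.append((match["label"].strip(), value, lim["kind"], limit))
    return hits


# A few lines copied from the output above.
sample = """\
edge: +85.0°C (crit = +80.0°C, hyst = +0.0°C)
Tctl: +85.2°C
Composite: +57.9°C (low = -0.1°C, high = +74.8°C)
SYSTIN: +97.0°C (high = +0.0°C, hyst = +0.0°C) sensor = thermistor
CPUTIN: +87.5°C (high = +80.0°C, hyst = +75.0°C) ALARM sensor = thermistor
"""

for label, value, kind, limit in over_limit(sample):
    print(f"{label}: {value:.1f} °C exceeds {kind} = {limit:.1f} °C")
```

On the sample lines this flags `edge` (above its critical limit) and `CPUTIN` (above its high limit), while `SYSTIN` is skipped because its limit is 0.0 °C.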

Fully loaded:

    1 [|||||||||||||||||||||||||||||||||||||||| 52.9%] Tasks: 120, 405 thr; 5 running
    2 [||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||99.4%] Load average: 7.28 6.82 6.02
    3 [||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||99.4%] Uptime: 8 days, 07:27:51
    4 [||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||94.1%]
    5 [|||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||100.0%]
    6 [|||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||| 88.2%]
    7 [||||||||||||||||||||||||||||||||| 44.2%]
    8 [||||||||||||||||||||||||||||||||||||||| 50.6%]
    Mem[||||||||||||||||||||||||||||||||||||||||||||||||||||||| 28.7G/60.8G]
    Swp[ 0K/0K]

Added, 22-Aug-2020: I noticed that the system clock on the A300M motherboard lags significantly, so you need to run ntpdate or timesyncd to keep the time correct.
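To get a rough idea of how far the clock is off, one can ask an NTP server directly. The sketch below is an assumption-laden illustration rather than a replacement for ntpdate or timesyncd: it sends a single SNTP client request, reads the server's transmit timestamp, and assumes a symmetric network delay. The server name `pool.ntp.org` and the function names are my own choices.

```python
import socket
import struct
import time

# Seconds between the NTP epoch (1900-01-01) and the Unix epoch (1970-01-01).
NTP_EPOCH_OFFSET = 2208988800


def ntp_to_unix(seconds, fraction):
    """Convert a 64-bit NTP timestamp (32.32 fixed point) to Unix time."""
    return seconds - NTP_EPOCH_OFFSET + fraction / 2**32


def clock_offset(server="pool.ntp.org", timeout=5.0):
    """Return the approximate offset (server time minus local time) in seconds.

    Assumes the network delay is the same in both directions.
    """
    # First byte 0x23: leap indicator 0, version 4, mode 3 (client).
    request = b"\x23" + 47 * b"\0"
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        sent = time.time()
        sock.sendto(request, (server, 123))
        reply, _ = sock.recvfrom(512)
        received = time.time()
    if len(reply) < 48:
        raise ValueError("short NTP reply")
    # The transmit timestamp occupies bytes 40..47 of the reply.
    seconds, fraction = struct.unpack("!II", reply[40:48])
    server_time = ntp_to_unix(seconds, fraction)
    # Compare against the midpoint between sending and receiving.
    return server_time - (sent + received) / 2
```

Calling `clock_offset()` from time to time and printing the result gives a feeling for how quickly the clock falls behind; a positive value means the local clock is behind the server.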
