This post has moved to eklausmeier.goip.de/blog/2021/02-28-analysis-and-usage-of-sshguard.

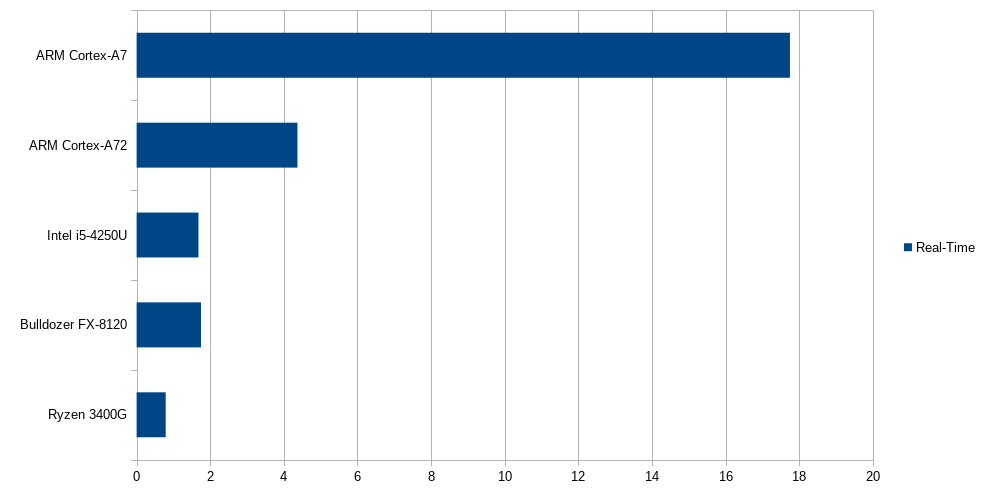

To ban unwanted ssh login attempts on your Linux box you can use fail2ban or, alternatively, SSHGuard. SSHGuard’s installed size is 1.3 MB on Arch Linux. Its source code, including all C files, headers, manuals, configuration, and makefiles, is 8 KLines. In contrast, the Python source code of fail2ban version 0.11.2 alone is 31 KLines, not counting configuration files, manuals, and text files; its installed size is 3.3 MB. fail2ban is also considerably slower than SSHGuard. For example, on one machine fail2ban used 7 minutes of CPU time, where SSHGuard used 11 seconds. I have written on fail2ban in “Blocking Network Attackers”, “Chinese Hackers”, and “Blocking IP addresses with ipset”.

SSHGuard is a package in Arch Linux, and there is a Wiki page on it.

1. Internally SSHGuard maintains three lists:

- whitelist: allowed IP addresses, given by configuration

- blocklist: list of IP addresses which are blocked, but which can become unblocked after some time, in-memory only

- blacklist: permanently blocked IP addresses, stored in cleartext in file

SSHGuard’s main function is summarized in the excerpt below from its shell script /bin/sshguard.

eval $tailcmd | $libexec/sshg-parser | \

$libexec/sshg-blocker $flags | $BACKEND &

wait

There are four programs, where each reads from stdin and writes to stdout, and does a well defined job. Each program stays in an infinite loop.

- $tailcmd reads the log, for example via tail -f, which might contain the offending IP address

- sshg-parser parses stdin for offending IP’s

- sshg-blocker writes IP addresses

- $BACKEND is a firewall shell script which either uses iptables, ipset, nft, etc.
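To make the division of labour concrete, here is a toy version of the pipeline. All four functions (fake_tail, fake_parser, fake_blocker, fake_backend) are made-up stand-ins, not SSHGuard code, and the log lines and addresses are invented. Unlike the real sshg-blocker, the toy blocker blocks on the very first attack, and the final stage only prints what it would do.

```shell
# Toy stand-ins for the four stages; none of this is SSHGuard's real code.

# Stage 1: stands in for $tailcmd, emits two invented log lines.
fake_tail() {
    printf '%s\n' \
        'Failed password for invalid user admin from 203.0.113.7 port 4711 ssh2' \
        'Accepted publickey for alice from 192.0.2.10 port 4712 ssh2'
}

# Stage 2: stands in for sshg-parser, prints "service address kind dangerousness".
fake_parser() {
    sed -n 's/^Failed password for invalid user .* from \([0-9.]*\) port .*$/100 \1 4 10/p'
}

# Stage 3: stands in for sshg-blocker, turns every attack into a block command.
# The prefix length 32 is an assumption for a single IPv4 host.
fake_blocker() {
    while read -r service address kind danger; do
        echo "block $address $kind 32"
    done
}

# Stage 4: stands in for $BACKEND, only reports what it would do.
fake_backend() {
    while read -r cmd address kind cidr; do
        echo "would run: ipset add sshguard$kind $address/$cidr"
    done
}

fake_tail | fake_parser | fake_blocker | fake_backend
```

Only the failed login reaches the last stage; the successful login is dropped by the parser stage.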

sshg-blocker in addition to writing to stdout, also writes to a file, usually /var/db/sshguard/blacklist.db. This is the blacklist file. The content looks like this:

1613412470|100|4|39.102.76.239

1613412663|100|4|62.210.137.165

1613415749|100|4|39.109.122.173

1613416009|100|4|80.102.214.209

1613416139|100|4|106.75.6.234

1613418135|100|4|42.192.140.183

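Fields are separated by |. The first field is the time in time_t format (seconds since the Unix epoch), the second is the service, in our case always 100=ssh, the third is either 4 for IPv4 or 6 for IPv6, and the fourth is the blocked IP address.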
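The record layout can be decoded with a few lines of awk. This is a small sketch: decode_blacklist is a hypothetical helper name, and it only knows the name of service code 100, printing any other code as a number.

```shell
# Decode blacklist.db lines read from stdin into a readable form.
# Only service code 100 (ssh) is mapped to a name; other codes stay numeric.
decode_blacklist() {
    awk -F'[|]' '{
        service = ($2 == 100) ? "ssh" : "service " $2
        family  = ($3 == 6) ? "IPv6" : "IPv4"
        printf "%s %s %s blocked-at-epoch %s\n", $4, family, service, $1
    }'
}

printf '%s\n' '1613412470|100|4|39.102.76.239' | decode_blacklist
```

On a live system one could feed it the real file, for example decode_blacklist < /var/db/sshguard/blacklist.db.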

SSHGuard handles the following services:

enum service {

SERVICES_ALL = 0, //< anything

SERVICES_SSH = 100, //< ssh

SERVICES_SSHGUARD = 110, //< SSHGuard

SERVICES_UWIMAP = 200, //< UWimap for imap and pop daemon

SERVICES_DOVECOT = 210, //< dovecot

SERVICES_CYRUSIMAP = 220, //< cyrus-imap

SERVICES_CUCIPOP = 230, //< cucipop

SERVICES_EXIM = 240, //< exim

SERVICES_SENDMAIL = 250, //< sendmail

SERVICES_POSTFIX = 260, //< postfix

SERVICES_OPENSMTPD = 270, //< OpenSMTPD

SERVICES_COURIER = 280, //< Courier IMAP/POP

SERVICES_FREEBSDFTPD = 300, //< ftpd shipped with FreeBSD

SERVICES_PROFTPD = 310, //< ProFTPd

SERVICES_PUREFTPD = 320, //< Pure-FTPd

SERVICES_VSFTPD = 330, //< vsftpd

SERVICES_COCKPIT = 340, //< cockpit management dashboard

SERVICES_CLF_UNAUTH = 350, //< HTTP 401 in common log format

SERVICES_CLF_PROBES = 360, //< probes for common web services

SERVICES_CLF_LOGIN_URL = 370, //< CMS framework logins in common log format

SERVICES_OPENVPN = 400, //< OpenVPN

SERVICES_GITEA = 500, //< Gitea

};

2. A typical configuration file might look like this:

LOGREADER="LANG=C /usr/bin/journalctl -afb -p info -n1 -t sshd -o cat"

THRESHOLD=10

BLACKLIST_FILE=10:/var/db/sshguard/blacklist.db

BACKEND=/usr/lib/sshguard/sshg-fw-ipset

PID_FILE=/var/run/sshguard.pid

WHITELIST_ARG=192.168.178.0/24

Furthermore one has to add below lines to /etc/ipset.conf:

create -exist sshguard4 hash:net family inet

create -exist sshguard6 hash:net family inet6

Also, /etc/iptables/iptables.rules and /etc/iptables/ip6tables.rules need the following link to ipset respectively:

-A INPUT -m set --match-set sshguard4 src -j DROP

-A INPUT -m set --match-set sshguard6 src -j DROP

3. Firewall script sshg-fw-ipset, called “BACKEND”, is essentially:

fw_init() {

ipset -quiet create -exist sshguard4 hash:net family inet

ipset -quiet create -exist sshguard6 hash:net family inet6

}

fw_block() {

ipset -quiet add -exist sshguard$2 $1/$3

}

fw_release() {

ipset -quiet del -exist sshguard$2 $1/$3

}

...

while read -r cmd address addrtype cidr; do

case $cmd in

block)

fw_block "$address" "$addrtype" "$cidr";;

release)

fw_release "$address" "$addrtype" "$cidr";;

flush)

fw_flush;;

flushonexit)

flushonexit=YES;;

*)

die 65 "Invalid command";;

esac

done

The “BACKEND” is called from sshg-blocker as follows:

static void fw_block(const attack_t *attack) {

unsigned int subnet_size = fw_block_subnet_size(attack->address.kind);

printf("block %s %d %u\n", attack->address.value, attack->address.kind, subnet_size);

fflush(stdout);

}

static void fw_release(const attack_t *attack) {

unsigned int subnet_size = fw_block_subnet_size(attack->address.kind);

printf("release %s %d %u\n", attack->address.value, attack->address.kind, subnet_size);

fflush(stdout);

}
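The protocol between sshg-blocker and the backend is therefore plain text: one command per line, followed by address, address kind, and subnet size. The following dry-run backend is a sketch for experimenting with that protocol without touching the firewall. dry_run_backend is a hypothetical name, and the subnet size 32 in the sample input is an assumed value for a single IPv4 host.

```shell
# Dry-run backend: reads sshg-blocker style commands from stdin and prints the
# ipset call a real backend would execute, instead of changing the firewall.
dry_run_backend() {
    while read -r cmd address addrtype cidr; do
        case $cmd in
            block)   echo "ipset -quiet add -exist sshguard$addrtype $address/$cidr" ;;
            release) echo "ipset -quiet del -exist sshguard$addrtype $address/$cidr" ;;
            *)       echo "ignored: $cmd" >&2 ;;
        esac
    done
}

printf 'block 39.102.76.239 4 32\nrelease 39.102.76.239 4 32\n' | dry_run_backend
```

Because each stage only talks through stdin and stdout, a backend can be swapped for a script like this one during testing.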

SSHGuard is using the list-implementation SimCList from Michele Mazzucchi.

4. sshg-parser uses flex (=lex) and bison (=yacc) for evaluating log-messages. An introduction to flex and bison is here. Tokenization for ssh using flex is:

"Disconnecting "[Ii]"nvalid user "[^ ]+" " { return SSH_INVALUSERPREF; }

"Failed password for "?[Ii]"nvalid user ".+" from " { return SSH_INVALUSERPREF; }

Actions based on tokens, using bison, are:

%token SSH_INVALUSERPREF SSH_NOTALLOWEDPREF SSH_NOTALLOWEDSUFF

msg_single:

sshmsg { attack->service = SERVICES_SSH; }

| sshguardmsg { attack->service = SERVICES_SSHGUARD; }

. . .

;

/* attack rules for SSHd */

sshmsg:

/* login attempt from non-existent user, or from existent but non-allowed user */

ssh_illegaluser

/* incorrect login attempt from valid and allowed user */

| ssh_authfail

| ssh_noidentifstring

| ssh_badprotocol

| ssh_badkex

;

ssh_illegaluser:

/* nonexistent user */

SSH_INVALUSERPREF addr

| SSH_INVALUSERPREF addr SSH_ADDR_SUFF

/* existent, unallowed user */

| SSH_NOTALLOWEDPREF addr SSH_NOTALLOWEDSUFF

;
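For readers without flex and bison at hand, the two token patterns shown above can be roughly approximated with extended regular expressions in sed. This is only an approximation of the idea, not the real grammar: extract_invalid_user_addr is a hypothetical helper, it handles IPv4 only, and the sample log lines are invented.

```shell
# Print the IPv4 address from log lines that resemble the two
# SSH_INVALUSERPREF patterns; all other lines produce no output.
extract_invalid_user_addr() {
    sed -nE \
        -e 's/^.*Disconnecting [Ii]nvalid user [^ ]+ ([0-9]+(\.[0-9]+){3}).*$/\1/p' \
        -e 's/^.*(Failed password for )?[Ii]nvalid user .+ from ([0-9]+(\.[0-9]+){3}).*$/\2/p'
}

printf '%s\n' \
    'Failed password for invalid user admin from 203.0.113.7 port 4711 ssh2' \
    'Disconnecting invalid user guest 198.51.100.23 port 4712: Too many authentication failures [preauth]' \
    'Accepted publickey for alice from 192.0.2.10 port 4713 ssh2' |
    extract_invalid_user_addr
```

The first two lines yield their source address, the accepted login yields nothing. The real parser additionally distinguishes token types and address families, which a pair of regular expressions does not.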

Once an attack is noticed, it is just printed to stdout:

static void print_attack(const attack_t *attack) {

printf("%d %s %d %d\n", attack->service, attack->address.value,

attack->address.kind, attack->dangerousness);

}
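Each output line thus has the form "service address kind dangerousness". How sshg-blocker weighs these lines is not shown in the excerpts here. The sketch below assumes the simplest reading of THRESHOLD: sum the dangerousness per address and block once the sum reaches the threshold. toy_blocker is a hypothetical name, the prefix lengths 32 and 128 are assumed, and timing aspects such as expiry of old attacks are ignored.

```shell
# Accumulate dangerousness per address and emit a block command once the
# running total reaches the threshold (first argument, default 10).
toy_blocker() {
    awk -v threshold="${1:-10}" '{
        score[$2] += $4
        if (score[$2] >= threshold && !blocked[$2]) {
            blocked[$2] = 1
            cidr = ($3 == 6) ? 128 : 32   # assumed full-host prefix lengths
            printf "block %s %s %d\n", $2, $3, cidr
        }
    }'
}

printf '100 203.0.113.7 4 10\n100 203.0.113.7 4 10\n100 198.51.100.23 4 10\n' |
    toy_blocker 20
```

With a threshold of 20, the first address is blocked after its second attack, while the address seen only once stays unblocked.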

5. For exporting fail2ban’s blocked IP addresses to SSHGuard one would use below SQL:

select ip from (select ip from bans union select ip from bips)

to extract from /var/lib/fail2ban/fail2ban.sqlite3.
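The resulting list of addresses still has to be brought into the blacklist format shown earlier. A minimal sketch, assuming that SSHGuard accepts records written this way and that every address should be filed under service 100: to_blacklist is a hypothetical helper, and it guesses the address family from the presence of a colon.

```shell
# Convert one IP address per line on stdin into blacklist.db style records.
# $1 is the epoch timestamp to record; it defaults to the current time.
to_blacklist() {
    ts=${1:-$(date +%s)}
    while read -r ip; do
        [ -n "$ip" ] || continue          # skip empty lines
        case $ip in
            *:*) kind=6 ;;                # a colon suggests IPv6
            *)   kind=4 ;;
        esac
        echo "$ts|100|$kind|$ip"
    done
}

printf '203.0.113.7\n2001:db8::1\n' | to_blacklist 1613412470
```

In practice the input would come from the sqlite3 command line tool running the query above against /var/lib/fail2ban/fail2ban.sqlite3, with the output appended to the blacklist file while SSHGuard is stopped.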

6. To unblock an IP address that got blocked inadvertently, you can simply issue

ipset del sshguard4 <IP-address>

in case you are using ipset as “BACKEND”. If this IP address is also present in the blacklist, you have to delete it there as well. For that, you must stop SSHGuard.
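Removing the address from the blacklist file can be done with a one-line filter. This is a sketch: blacklist_without is a hypothetical helper that prints the file without the records for one address, and the demonstration runs on a temporary file rather than on the real blacklist.

```shell
# Print blacklist content without the records for one IP address.
# $1 = blacklist file, $2 = IP address to drop.
blacklist_without() {
    awk -F'[|]' -v ip="$2" '$4 != ip' "$1"
}

# Demonstration on a temporary file, not on the real blacklist.
tmp=$(mktemp)
printf '%s\n' '1613412470|100|4|39.102.76.239' '1613412663|100|4|62.210.137.165' > "$tmp"
blacklist_without "$tmp" 62.210.137.165
rm -f "$tmp"
```

On a real system one would stop SSHGuard first, write the filtered output to a new file, move it over /var/db/sshguard/blacklist.db, and then start SSHGuard again. How the service is stopped depends on the init system.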
